1- WordLift — AI Powered plugin with smart SEO benefits

WordLift is a semantic plugin for WordPress. It applies Artificial Intelligence technologies to help creators produce richer content, organize it around their audience's needs, and promote it across search engines.

WordLift includes several features that can assist you with tasks such as:

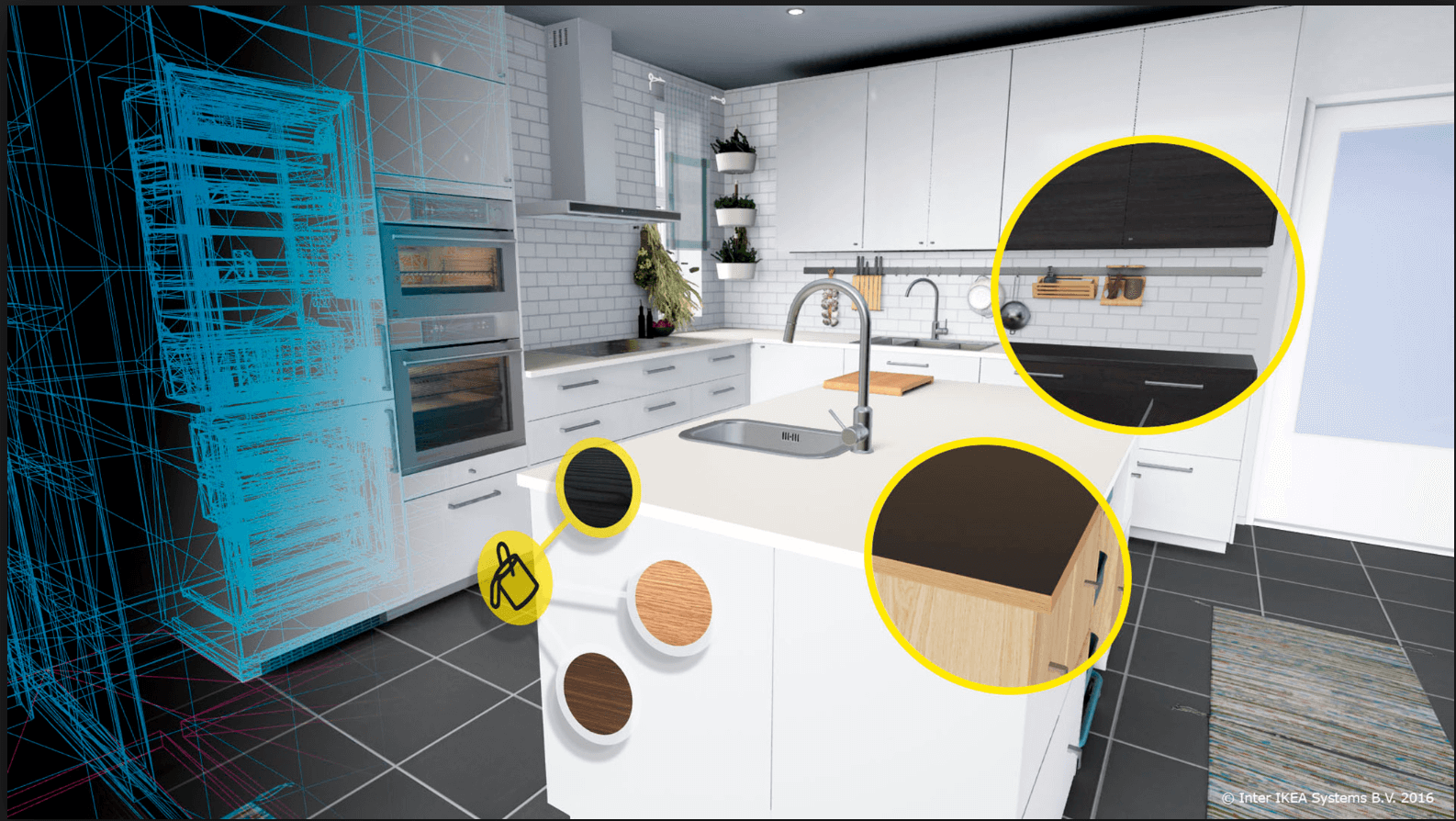

- Organizing your content by suggesting details and facts that give your audience context. It also adds semantic markup to your text to help machines fully understand your website.

- Building an aesthetically pleasing website with imagery and data visualization tools, such as maps and timelines, that engage readers in an immersive experience.

- Connecting readers with deeper content and using data to find new ways to publish and monetize your content.

- Using semantic content optimization to become more visible to search engines and better understood by them.

- Engaging a larger audience with relevant content and recommendations that increase the time visitors spend on your website.

2- The Facebook Pixel — tracking the behaviors of your website visitors

The Facebook Pixel lets you create custom audiences for your Facebook ads. With these audiences, you can build retargeting campaigns that show tailored ads to people while they browse Facebook on their phones or computers. The Pixel is valuable because it tracks your website visitors regardless of their referral source. Your retargeting efforts therefore also reinforce your marketing on other platforms. If your website runs on WordPress, you have an advantage: Facebook and WordPress have an integration that makes installing the Facebook Pixel easy.

Here are a few benefits of adding the Facebook Pixel to your WordPress website:

- Reaching the right target audience: the Facebook Pixel helps you find leads on Instagram and Facebook through retargeting ads that reach customers who are genuinely interested in your message.

- Growing your audience by building a similar (lookalike) audience: the 1% of Facebook users in a geographic area whose behavior most closely matches that of your website visitors.

- Gaining more data to help you understand your Facebook community in depth and see how it interacts with your website.

- Driving actions that matter to your business, for example by optimizing ads for conversions or even page views.

3- Social Report — the full-featured WordPress scheduling tool

Social Report is well suited to WordPress because it lets you write blog posts and publish them from a single, centralized social media dashboard. From the same dashboard you can also track clicks on your WordPress blog posts. The Social Report plugin helps you use these and other analytics to optimize your social media presence as you share blog posts, so you get the most out of each post and generate more traffic.

Social Report can enhance your WordPress website through its many features, which include:

- Smart Social Inbox, which gathers the messages from all of your social media profiles into one organized stream.

- Advanced social media posting and scheduling tools, which bring publishing features and intuitive workflows to your website.

- Social media monitoring and brand listening tools that help marketers analyze social data for marketing insights.

In the past couple of years,

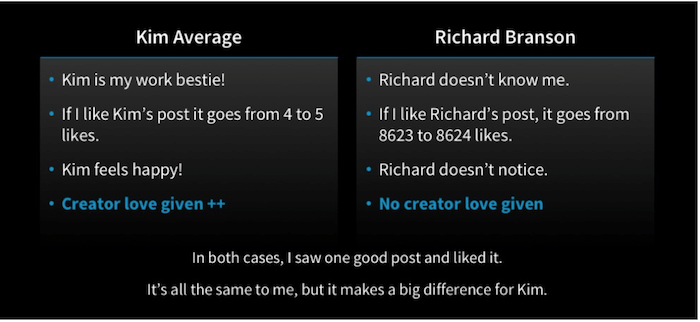

“Creator love” metrics describe how the creator feels about the viewer’s actions. Courtesy of the LinkedIn blog.